Primary metric dimensions

An important aspect of the EXPERImonitor’s support for experimental processes is the specification and delivery of various kinds of metrics, which will form a significant component of the final analysis of an FMI system. The experiment data and monitoring processes offered by the EXPERImonitor focus specifically on the generation and capture of quality of service (QoS), quality of experience (QoE) and quality of community (QoC) metrics. The characteristics of each dimension vary and are summarised in the table below.

Table 1: Metric dimensions

| Dimension | Characteristics |
|---|---|
| QoS | Measurements taken from a manufactured sensor or computing machine; these are objective and verifiable samples of the physical world. |
| QoE | A synthesis of data that can be based either on quantitative measures from the physical world (such as human-computer interaction logging) or on qualitative data that has been subjectively assessed by a human (an evaluation of the level of ‘immersion’, for example). |
| QoC | A synthesis of data based primarily on objectively measurable features of a social network environment (such as the number of responses in an on-line dialogue). Other qualitative and subjective measures, inferred by humans or by machines trained by humans, may also be used (the application of sentiment analysis is one such example). |

A selection of metrics from each of these dimensions may be used by an experimenter to better understand how people and technology interact and perform during the course of an experiment.

EXPERImonitor metric model overview

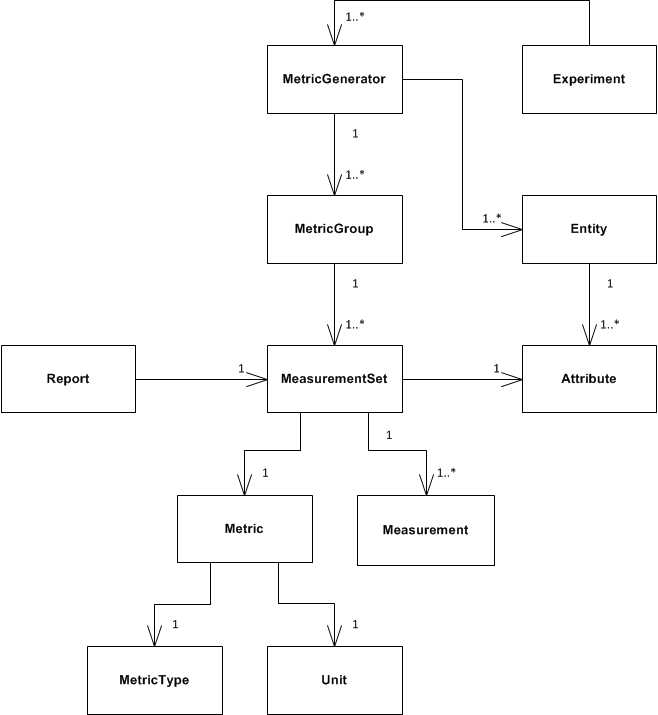

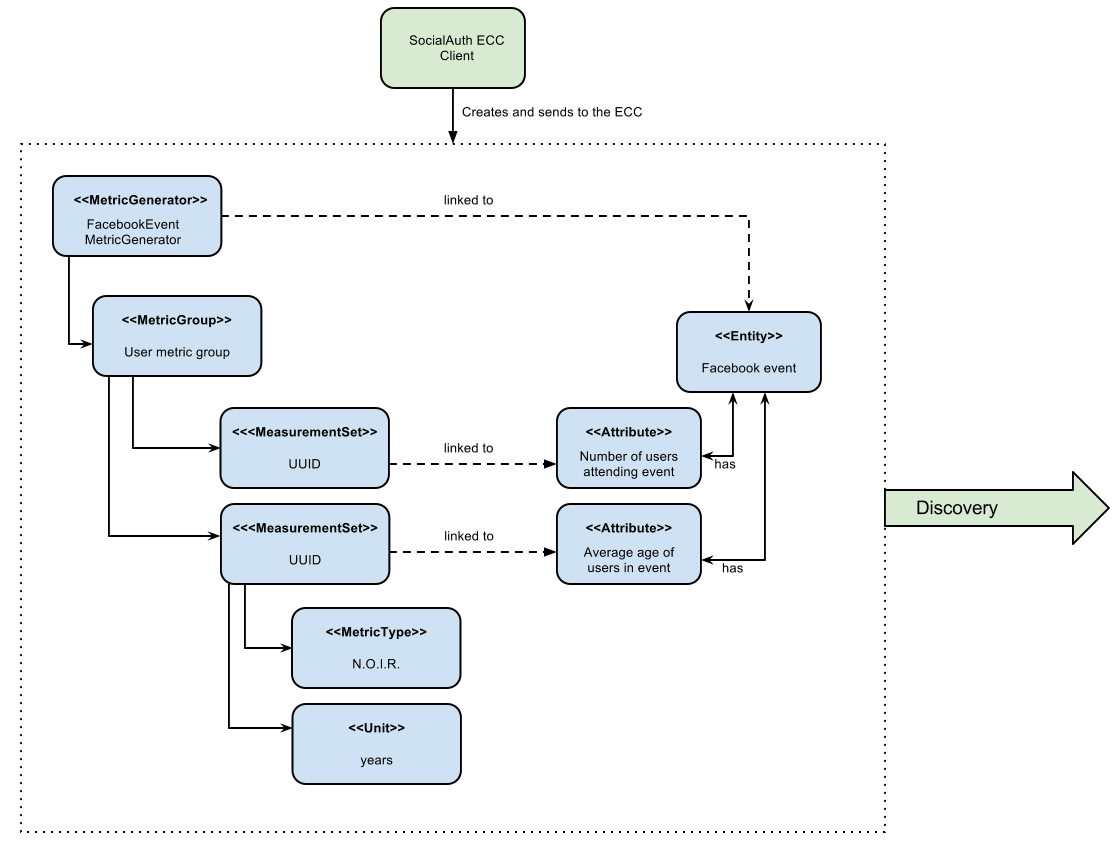

The EXPERImonitor provides a metric modelling framework that supports a range of potential QoS, QoE and QoC measurements; see the figure below.

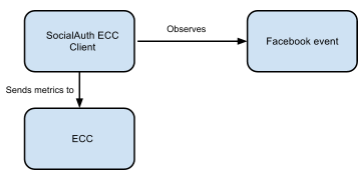

In this model, the objects of experimental observation (referred to as ‘Entities’) are de-coupled from the agent making the observations (the EXPERImonitor software client). Each Entity must contain one or more Attributes, which are the subject of the actual instrumentation and measurement activity. A simple example of such a relationship is presented below, in which an EXPERImonitor client (called ‘SocialAuth ECC client’) observes a Facebook event.

This very basic relationship needs to be developed further, however, since a) entities (in this case the ‘Facebook event’) will have certain attributes that are of interest to the client, and b) some organisation of the structure of the metric data associated with the entity must also be prescribed. To see how this is arranged, consider the figure below.

In this example, we have added two attribute instances to the entity, representing aspects of the Facebook event that we are interested in observing: (i) the number of users attending the event and (ii) the average age of users attending the event. We can consider the data management structures that support the collection of data for these two attributes from either a ‘top-down’ perspective (starting from Metric Generators) or a ‘bottom-up’ viewpoint, starting with a data collection type (the MeasurementSet type) that is mapped directly to an attribute of interest. For this example, we take the latter approach and start by linking data sets directly to an attribute.
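The entity/attribute/client relationship can be sketched in a few data classes. This is a minimal illustration, not the EXPERImonitor API: the class names, the `observe` method and the choice to link a client to its entities by UUID alone are assumptions made here to show the de-coupling described above.

```python
from dataclasses import dataclass, field
from uuid import UUID, uuid4


@dataclass
class Attribute:
    # A measurable aspect of an entity, e.g. "number of users attending".
    name: str
    uuid: UUID = field(default_factory=uuid4)


@dataclass
class Entity:
    # An object of experimental observation. It owns its attributes but
    # holds no reference to whichever client observes it.
    name: str
    attributes: list[Attribute] = field(default_factory=list)
    uuid: UUID = field(default_factory=uuid4)


@dataclass
class Client:
    # The observing agent (hypothetical stand-in for an EXPERImonitor client).
    # It refers to observed entities only by UUID, keeping the two sides de-coupled.
    name: str
    observed_entities: set[UUID] = field(default_factory=set)

    def observe(self, entity: Entity) -> None:
        # The model requires every entity to carry at least one attribute.
        if not entity.attributes:
            raise ValueError(f"entity {entity.name!r} has no attributes")
        self.observed_entities.add(entity.uuid)


event = Entity("Facebook event", [
    Attribute("Number of users attending"),
    Attribute("Average age of users attending"),
])
client = Client("SocialAuth ECC client")
client.observe(event)
print(len(event.attributes), event.uuid in client.observed_entities)  # 2 True
```

Because the entity never points back at the client, several clients could observe the same entity without the entity's definition changing.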

The MeasurementSet type holds a set of measurements that relate specifically to one attribute, and it also carries metric metadata indicating its Metric Type (nominal, ordinal, interval or ratio) and its Unit of measure. In the diagram above, we see two MeasurementSet instances (each uniquely identified by a UUID value), which are mapped directly to the attributes of interest.
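A measurement set can be sketched as a UUID-identified container that points at one attribute and carries its metric type and unit. The sketch below is hypothetical (field and method names are not taken from EXPERImonitor), and the `central_tendency` helper is an addition used to show why the metric type matters: nominal data only supports a mode, ordinal data a median, and interval or ratio data a mean. The ratio type and "Users" unit chosen for the attendee count are assumptions.

```python
import statistics
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from uuid import UUID, uuid4


class MetricType(Enum):
    # The four levels of measurement named in the text.
    NOMINAL = "NOMINAL"
    ORDINAL = "ORDINAL"
    INTERVAL = "INTERVAL"
    RATIO = "RATIO"


@dataclass
class Measurement:
    timestamp: datetime
    value: str  # kept as text so nominal labels fit alongside numbers


@dataclass
class MeasurementSet:
    attribute_id: UUID        # the attribute this set is mapped to
    metric_type: MetricType
    unit: str
    uuid: UUID = field(default_factory=uuid4)
    measurements: list[Measurement] = field(default_factory=list)

    def add(self, value) -> None:
        self.measurements.append(
            Measurement(datetime.now(timezone.utc), str(value)))

    def central_tendency(self):
        # Pick a summary statistic that is meaningful for the metric type.
        values = [m.value for m in self.measurements]
        if not values:
            raise ValueError("no measurements recorded")
        if self.metric_type is MetricType.NOMINAL:
            return statistics.mode(values)         # most frequent label
        numbers = [float(v) for v in values]
        if self.metric_type is MetricType.ORDINAL:
            return statistics.median_low(numbers)  # an actually observed rank
        return statistics.mean(numbers)            # interval or ratio data


# uuid4() stands in for the UUID of the "number of users attending" attribute.
attendees = MeasurementSet(uuid4(), MetricType.RATIO, "Users")
for count in (120, 135, 150):
    attendees.add(count)
print(attendees.central_tendency())  # 135.0
```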

Table 2: Example metrics

| Notes | Metric Group | Measurement Set ID | Metric Type | Metric Unit | Attribute | Entity |
|---|---|---|---|---|---|---|
| QoS examples for a media server running an FMI video streaming service. | Server QoS group | 2a6bb6b3-2465-4dc5-980b-cb8f78043a7a | RATIO | Milliseconds | PING network response | Media server |
| | Server QoS group | ed3bf728-cd65-4bb3-8453-446f7e56c0f4 | RATIO | Frames/second | Video transcoding rate | Media server |
| QoS example for a client connected to an FMI video streaming service. | Client QoS group | 328cadc6-afea-481a-9b49-9ca3a63ae252 | RATIO | Frames | Dropped frame count | Client receiver |
| QoS environment data example. | Client QoS group | d8087fbe-ae37-4325-a8ee-79cffc99071c | INTERVAL | Celsius | Temperature | Client device |
| QoE video streaming experience report; a pre-defined 7-point Likert scale is used as a basis. | Client QoE group | 7620bf4b-0a51-41b8-9a17-870f2454cd78 | ORDINAL | Likert 7-scale | Perceived video smoothness | User |
| QoE interaction logging (nominal ‘Action’ definitions should be pre-defined in a dictionary). | Client QoE group | 8fcfdf27-a51e-455a-8621-47e5fa4d264d | NOMINAL | Action | Video player interactions | User |
| QoC activity for the FMI community. | QoC community group | 5fb41674-490a-4bb8-be99-e20adf2fd7e1 | RATIO | Log-ins/day | User log-ins | FMI community |
| QoC activity for a user of the FMI community (URL to publicly available content). | QoC user group | 0b789291-4392-4288-95af-544486508a85 | NOMINAL | Content post | User content | Client device |

The table above shows a number of illustrative metrics from the QoS, QoE and QoC domains, with notes to offer context. Reading from left to right, we can see how groups of measurement sets (each with an associated metric type and unit) are mapped to the entities under observation in the real or virtual world. To save space, metric generator mappings have not been included.
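Reading Table 2 from left to right amounts to a lookup from a measurement set to the attribute and entity it observes. The sketch below encodes the table rows as plain tuples (the tuple layout and the function names are illustrative assumptions, not part of EXPERImonitor) and shows two queries: which entities each metric group touches, and what a given measurement set ID refers to.

```python
from collections import defaultdict

# Columns: metric group, measurement set ID, metric type, unit, attribute, entity
TABLE_2 = [
    ("Server QoS group", "2a6bb6b3-2465-4dc5-980b-cb8f78043a7a", "RATIO", "Milliseconds", "PING network response", "Media server"),
    ("Server QoS group", "ed3bf728-cd65-4bb3-8453-446f7e56c0f4", "RATIO", "Frames/second", "Video transcoding rate", "Media server"),
    ("Client QoS group", "328cadc6-afea-481a-9b49-9ca3a63ae252", "RATIO", "Frames", "Dropped frame count", "Client receiver"),
    ("Client QoS group", "d8087fbe-ae37-4325-a8ee-79cffc99071c", "INTERVAL", "Celsius", "Temperature", "Client device"),
    ("Client QoE group", "7620bf4b-0a51-41b8-9a17-870f2454cd78", "ORDINAL", "Likert 7-scale", "Perceived video smoothness", "User"),
    ("Client QoE group", "8fcfdf27-a51e-455a-8621-47e5fa4d264d", "NOMINAL", "Action", "Video player interactions", "User"),
    ("QoC community group", "5fb41674-490a-4bb8-be99-e20adf2fd7e1", "RATIO", "Log-ins/day", "User log-ins", "FMI community"),
    ("QoC user group", "0b789291-4392-4288-95af-544486508a85", "NOMINAL", "Content post", "User content", "Client device"),
]


def entities_per_group(rows):
    # Collect the set of entities observed by each metric group.
    groups = defaultdict(set)
    for group, _set_id, _mtype, _unit, _attribute, entity in rows:
        groups[group].add(entity)
    return dict(groups)


def resolve(rows, set_id):
    # Trace a measurement set ID back to the (entity, attribute) it observes.
    for _group, row_id, _mtype, _unit, attribute, entity in rows:
        if row_id == set_id:
            return entity, attribute
    raise KeyError(set_id)


print(sorted(entities_per_group(TABLE_2)["Client QoS group"]))
# ['Client device', 'Client receiver']
print(resolve(TABLE_2, "d8087fbe-ae37-4325-a8ee-79cffc99071c"))
# ('Client device', 'Temperature')
```

The first query shows that a single metric group may span more than one entity, which is why the mapping is kept at the measurement set level rather than at the group level.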

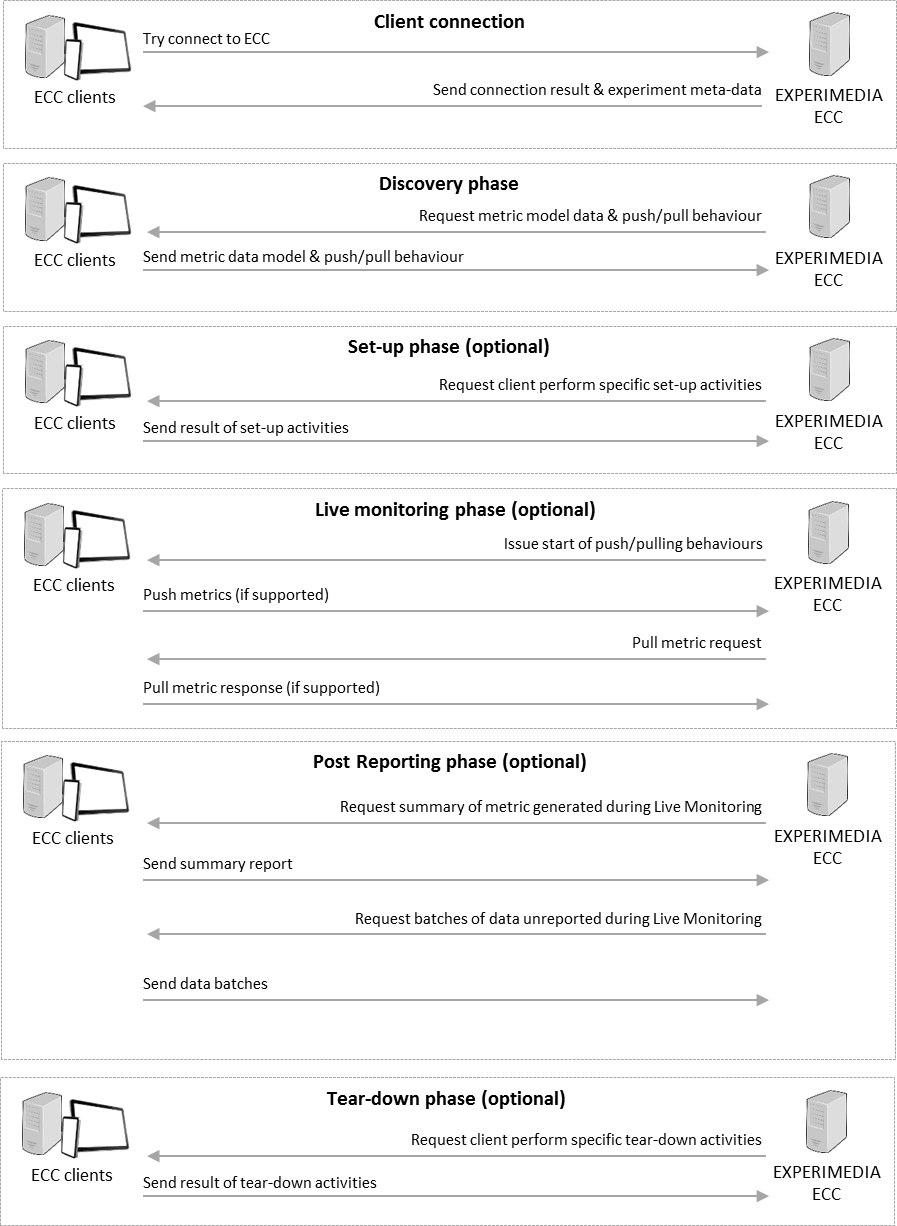

Moving up the data hierarchy, the next level of logical organisation is the Metric Group: a container that partitions collections of related measurements (for example, video rendering metrics). Metric Groups are in turn collected together by the top-level data organisation, the Metric Generator. As previously indicated, a Metric Generator represents a system-level component that generates metrics; for example, it may be useful to differentiate between server-based and client-based metric generators. A further mapping, similar to the one that links measurement sets to attributes, links metric generators to the entities under observation, since individual systems are likely to be deployed to observe different entity types.

EXPERImonitor clients must send this specification of the metrics they will provide to the EXPERImonitor during the Discovery phase. This gives the experimenter a means of understanding which clients are performing which kinds of measurement, and what those measurements relate to within the experimental venue.
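The full hierarchy (Metric Generator, then Metric Group, then MeasurementSet) and the generator-to-entity mapping can be sketched as nested data classes serialised to JSON. This is a hypothetical illustration: the JSON shape, the generator name and the `discovery_payload` function are assumptions and do not describe the EXPERImonitor's actual Discovery-phase message format. The measurement set IDs, types and units are taken from Table 2.

```python
import json
from dataclasses import asdict, dataclass, field
from uuid import uuid4


@dataclass
class MeasurementSet:
    # Leaf of the hierarchy: mapped directly to one attribute of an entity.
    attribute: str
    metric_type: str  # NOMINAL, ORDINAL, INTERVAL or RATIO
    unit: str
    uuid: str = field(default_factory=lambda: str(uuid4()))


@dataclass
class MetricGroup:
    # Partitions related measurement sets, e.g. server QoS metrics.
    name: str
    measurement_sets: list[MeasurementSet] = field(default_factory=list)


@dataclass
class MetricGenerator:
    # Top level: a system component that produces metrics. `entities` holds
    # the generator-to-entity mapping.
    name: str
    entities: list[str]
    groups: list[MetricGroup] = field(default_factory=list)


def discovery_payload(generators: list[MetricGenerator]) -> str:
    # Hypothetical JSON rendering of a client's metric specification;
    # asdict() recurses into the nested groups and measurement sets.
    return json.dumps([asdict(g) for g in generators], indent=2)


server = MetricGenerator(
    name="Media server generator",  # hypothetical name
    entities=["Media server"],
    groups=[MetricGroup("Server QoS group", [
        MeasurementSet("PING network response", "RATIO", "Milliseconds",
                       "2a6bb6b3-2465-4dc5-980b-cb8f78043a7a"),
        MeasurementSet("Video transcoding rate", "RATIO", "Frames/second",
                       "ed3bf728-cd65-4bb3-8453-446f7e56c0f4"),
    ])],
)

payload = json.loads(discovery_payload([server]))
print(payload[0]["groups"][0]["measurement_sets"][1]["attribute"])
# Video transcoding rate
```

Sending the whole tree up front, rather than measurement sets one at a time, lets the receiving side see every group and entity mapping a client intends to report before any data arrives.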